Why India Needs to Shape AI and Future of Work Narratives Delicately

Indians might not lose jobs to AI in the way social media makes you think.

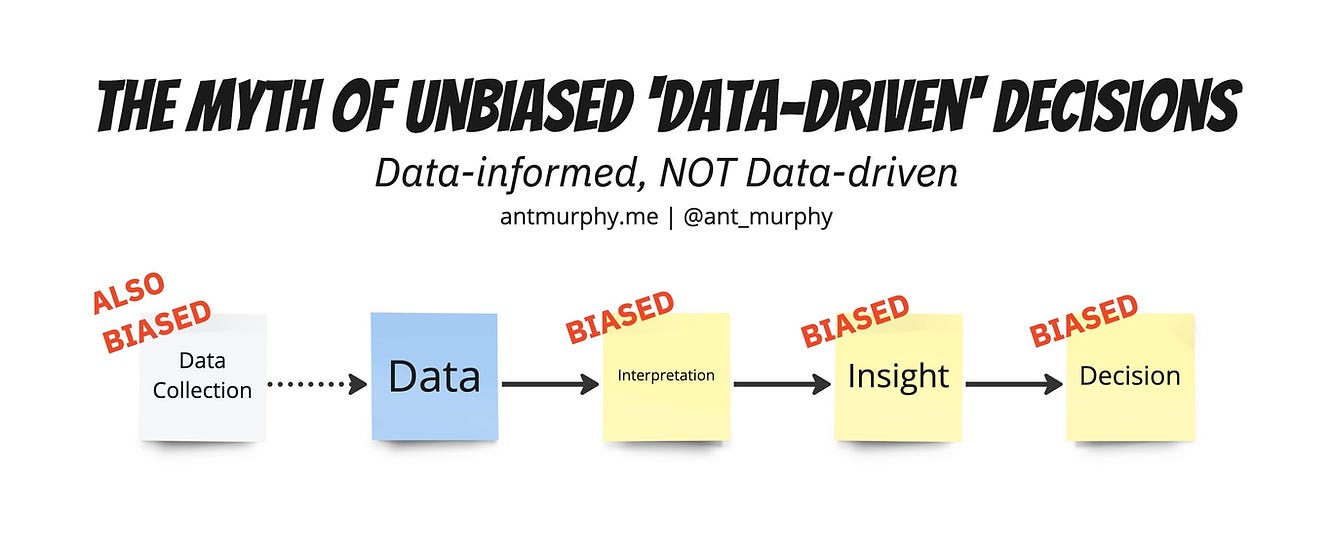

When we anticipate how the future of work will affect all of us, the canonical understanding has always been that something replaces something else while also creating new avenues and opportunities. However, when specific kinds of work are displaced and replaced by others, the human element involved in changing and shaping them matters a lot. One can argue that data may drive certain facets of decision-making, but in reality, data alone cannot be the sole basis of any policy or industry analysis. One must be data-informed, not data-driven.

That said, let's get right into the topic. The title of this article for The Bharat Pacific means that India, as a country, needs to shape the narratives around AI and the future of work delicately. Why is that the case?

First, India is one of the few countries, alongside Brazil, that can genuinely represent the concerns of the Global South. The geopolitical and diplomatic advantages of the Indian ecosystem make it easier for the country to shape AI + future of work narratives.

Why narratives? The "AI" that is currently the talk of the town does not, for the most part, include any pre-generative AI "deliverable". This means that all the narratives around AI "replacing" humans in some task refer specifically to AI "agents" (again, generative AI), generative AI workflows, or generative AI systems.

So when one bases narratives about the future of work on a specific class of machine learning / AI systems, it is futile to put all forms of "AI" on the same pedestal. Not all AI systems function the same way, and their limitations differ too. This is why I have clarified what I mean by "narratives".

Now, let's understand the second reason a narrative needs to be shaped, beyond geopolitical necessities.

The Global South is a fragmented bloc of countries when it comes to tech sovereignty.

Apart from a few countries like India and Brazil, most Global South countries have no clear idea how data protection laws, intellectual property provisions in their domestic laws, and other general legal conditions shape their sovereignty. The European / UN Charter-based idea of sovereignty has always been about asserting the sovereignty of a natural person who is a citizen of a particular country, so that the government of that nation-state asserts "tech" sovereignty (or cyber sovereignty) and provides rights to its citizens. Global South countries, by contrast, still need to come up with reasonable technology governance frameworks. In addition, relying on China alone to shape the Global South's issues is deeply problematic, since it gives Beijing a free hand in shaping many issues in multilateral forums, thereby confusing many Global South countries. India's position is unique because it is a democracy with context-specific and productive economic-diplomatic relations.

Even China’s market is not as “perfect” as everyone assumes.

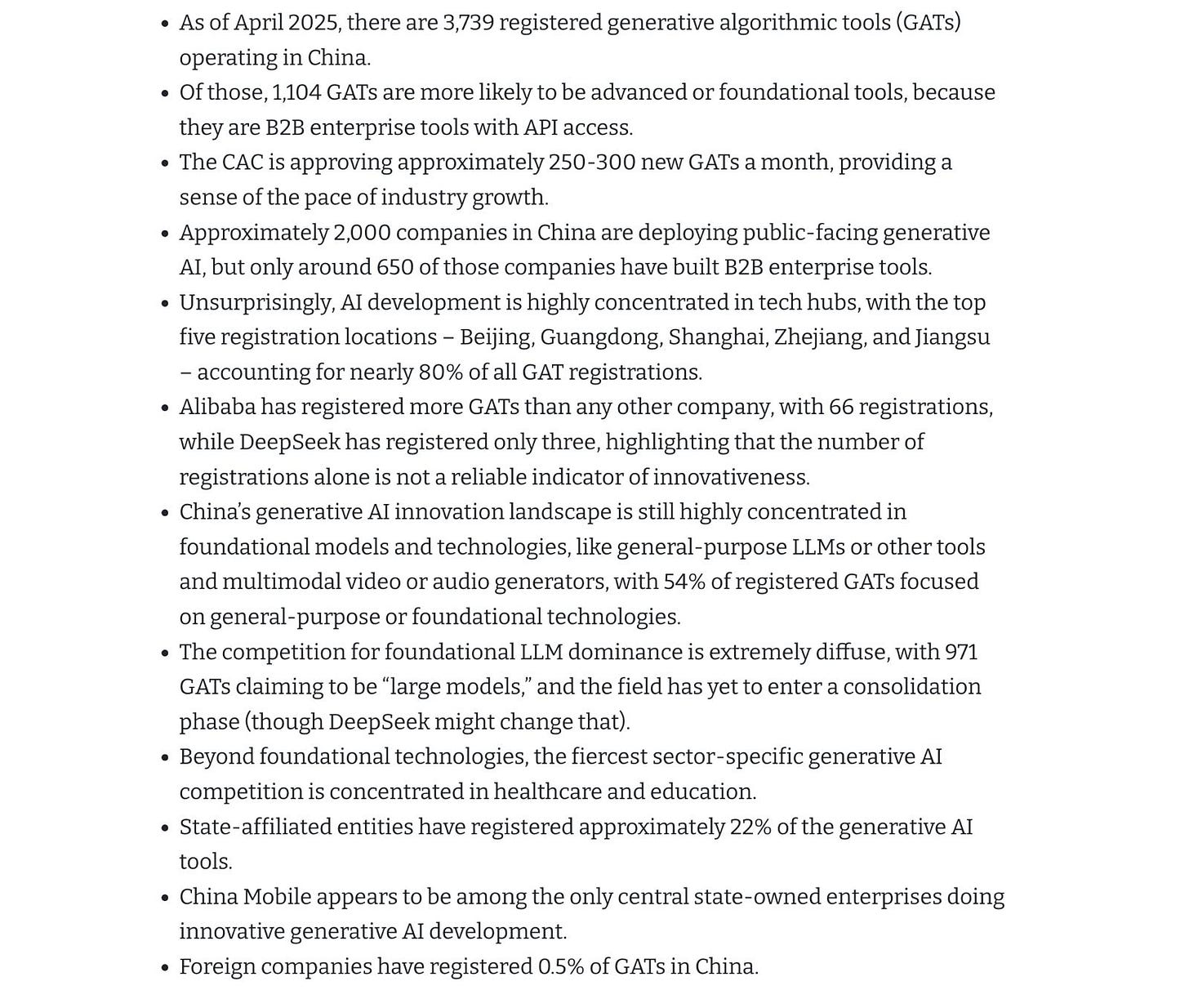

This brings us to the critical importance of being data-informed when analyzing the global AI landscape, especially when considering the narratives shaped by major powers like China. A recent analysis of China's generative AI registration data provides a stark, quantitative look into the reality of its ecosystem, moving beyond rhetoric and speculation. This data reveals a landscape that is not necessarily a coordinated march towards global leadership, but rather a chaotic and fiercely competitive domestic scramble.

This data-informed perspective reveals several key dynamics that underscore why India's role in shaping a separate narrative is so vital:

A Focus on Foundational Tech, Not Just Applications: The analysis shows that China’s AI development is overwhelmingly concentrated in building core technologies. A staggering 54% of its 3,739 registered generative AI tools are classified as "general-purpose" or "multimedia/creative," with hundreds of companies building their own foundational models. This reality—which led Baidu's CEO to warn of a "waste of resources"—contrasts sharply with simplistic narratives of AI seamlessly replacing specific job functions. The competition is still largely at the foundational layer, not in mature, sector-specific applications.

Significant State Influence: The data confirms deep state involvement, with approximately 22% of all registered AI tools being linked to state-affiliated entities. While State-Owned Enterprises (SOEs) like China Mobile are active, the analysis notes that many of these state-led projects lack true in-house innovation and often rely on partnerships with private tech firms. This shows that China's AI strategy is deeply intertwined with its state objectives and industrial policy, serving as a testing ground for large-scale applications rather than a model for open, global collaboration.

A Diffuse and Unconsolidated Market: Far from being a monolithic force, China's AI scene is described as an "all-out scramble at the starting line," with over 2,000 companies deploying public-facing generative AI tools. The field has yet to consolidate, highlighting a period of explosive, and perhaps inefficient, competition.
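To put the percentages above in absolute terms, here is a quick back-of-the-envelope conversion. It assumes that both shares are computed over the same base of 3,739 registered tools, which the analysis states for the 54% figure but only implies for the approximately 22% one; results are rounded to whole tools.

$$
\begin{aligned}
0.54 \times 3{,}739 &\approx 2{,}019 \ \text{tools (general-purpose or multimedia/creative)} \\
0.22 \times 3{,}739 &\approx 823 \ \text{tools (linked to state-affiliated entities)}
\end{aligned}
$$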

Beyond diplomacy, the dynamics of AI + future of work are unique enough that a testbed and talent hub like India is, at the macro level, large enough to absorb the expected effects and changes that generative AI brings to the future of work.

That way, the Government of India can gather feedback on labour security risks and, at the same time, guide other nations on AI adoption beyond the usual rhetoric on "digital public infrastructure", a topic on which many people have written the same books featuring the same people and the same narratives. Funnily, this reminds me of Hindi cinema producers and directors who made films with the same kinds of stories, narratives and twists to woo their audiences and "earn well", because they thought that "this is what the market wants".

But just because the "customer is king" doesn't mean you exploit them. Similarly, in the case of AI, if you create a narrative loop around generative AI and the future of work based on narratives told in foreign countries, you will neither achieve anything nor understand what may happen in India.

Here are some initial inferences:

Inference 1: China's "Model" is a Resource Trap, Not a Blueprint.

The fact that China's ecosystem is locked in a resource-intensive race to build hundreds of foundational models is a cautionary tale. The clear inference is that China's current path is not a strategic blueprint for the Global South, but a high-burn, capital-heavy arms race that is impractical for most nations to replicate. This creates an opening for India to champion a narrative of frugal, targeted innovation and high-impact applications, rather than a resource-draining "build-it-all" approach.

Inference 2: Adopting the Chinese Narrative Means Importing Authoritarian Governance.

With deep state involvement in over 20% of AI tools, the inference is that China's AI governance is inherently fused with state control and industrial policy. Adopting this narrative isn't merely a technical choice; it's an ideological one that implicitly exports a framework of digital authoritarianism. For the democracies of the Global South, this is a non-starter, making India's push for a transparent, rights-based governance framework not just an alternative, but a strategic necessity.

Inference 3: A Strategic Vacuum Exists for India to Fill, Right Now.

The most crucial inference is that China's market is an unconsolidated "scramble at the starting line." This means there is no single, mature "China model" for the Global South to follow yet. They are still figuring it out. This creates a strategic vacuum. India doesn't have to react to a dominant Chinese narrative; it has a window of opportunity to be proactive and establish the primary, alternative paradigm for AI development and governance while others are still in disarray.

Work Shift Paradigms are about Work Quality

As I discussed at our recent ISAIL Delhi Meet-up on AI and the future of work, here are some honest truths about AI and work quality that I can readily vouch for.

Many employers may be lowering the quality of work they expect from entry-level people, and this trend has been around for about a decade.

The COVID-19 pandemic upended the way we work, shaking the foundations of industries worldwide. Of course, we became more tech-savvy, and more online. Then came the generative AI (GenAI) wave, accompanied by relentless hype since 2023, which exposed deeper cracks in the job market.

But let’s be clear: the erosion of meaningful roles in the service sector isn’t a byproduct of AI’s rise. It’s the result of deliberate choices that have devalued skilled labor for years, with GenAI serving as a convenient scapegoat.

Take law firms, for instance—not just in India but globally.

The role of a law associate, once a position of rigorous analysis and advocacy, has been quietly downgraded in many places to that of a "note-taker" or "assistant." This isn’t because AI suddenly made legal expertise obsolete. Long before the GenAI boom, firms leaned on document management systems and cost-cutting measures to prioritize senior partners’ billable hours.

The result? Junior associates are sidelined into rote tasks, their potential squandered in the name of efficiency.

In the policy world, a similar story unfolds.

Post-graduate researchers, armed with advanced degrees, are increasingly funneled into "research assistantships" that offer meager pay and even less intellectual involvement. This isn’t a GenAI-driven phenomenon—budget constraints and hierarchical academic cultures have long undervalued original research.

The 2023-2025 AI hype, with its promise of automated summarisation tools, has only given institutions an excuse to further diminish these roles, reducing scholars to mere support staff.

Politicians, too, contribute to this trend. Parliamentary researchers, tasked with informing policy and legislation, are often relegated to "paper pushers" by leaders more focused on optics than substance. This isn’t a new issue sparked by AI’s rise; it’s a reflection of political incentives that prioritize short-term wins over deep, evidence-based work.

The hype around AI tools capable of churning out reports has only made it easier for decision-makers to sideline researchers, further eroding the value of their expertise.

Then there’s the world of management consulting, where entry-level consultants at Big 4 firms or larger consultancies, fresh from MBAs, PGPs, or BBAs, are often reduced to "PPT-drafting" drones.

Their work leans heavily on vibe-driven marketing of policy and fiscal ideas. This shift predates GenAI, rooted in the commoditisation of consulting services since the 1990s.

The AI hype of 2023-2025 has merely accelerated this, with tools generating slide decks used as an excuse to limit consultants’ scope.

Engineered Failures, Not AI’s Fault

None of these trends were sparked by generative AI or by the overblown excitement peddled by a few AI researchers and countless uninformed influencers between 2023 and 2025. These are engineered failures: deliberate moves to reshape or hollow out the job market. There are perhaps two kinds of “failures” that one can notice from recent market developments.

Work Quality Deprecation or Appreciation as one “Failure”

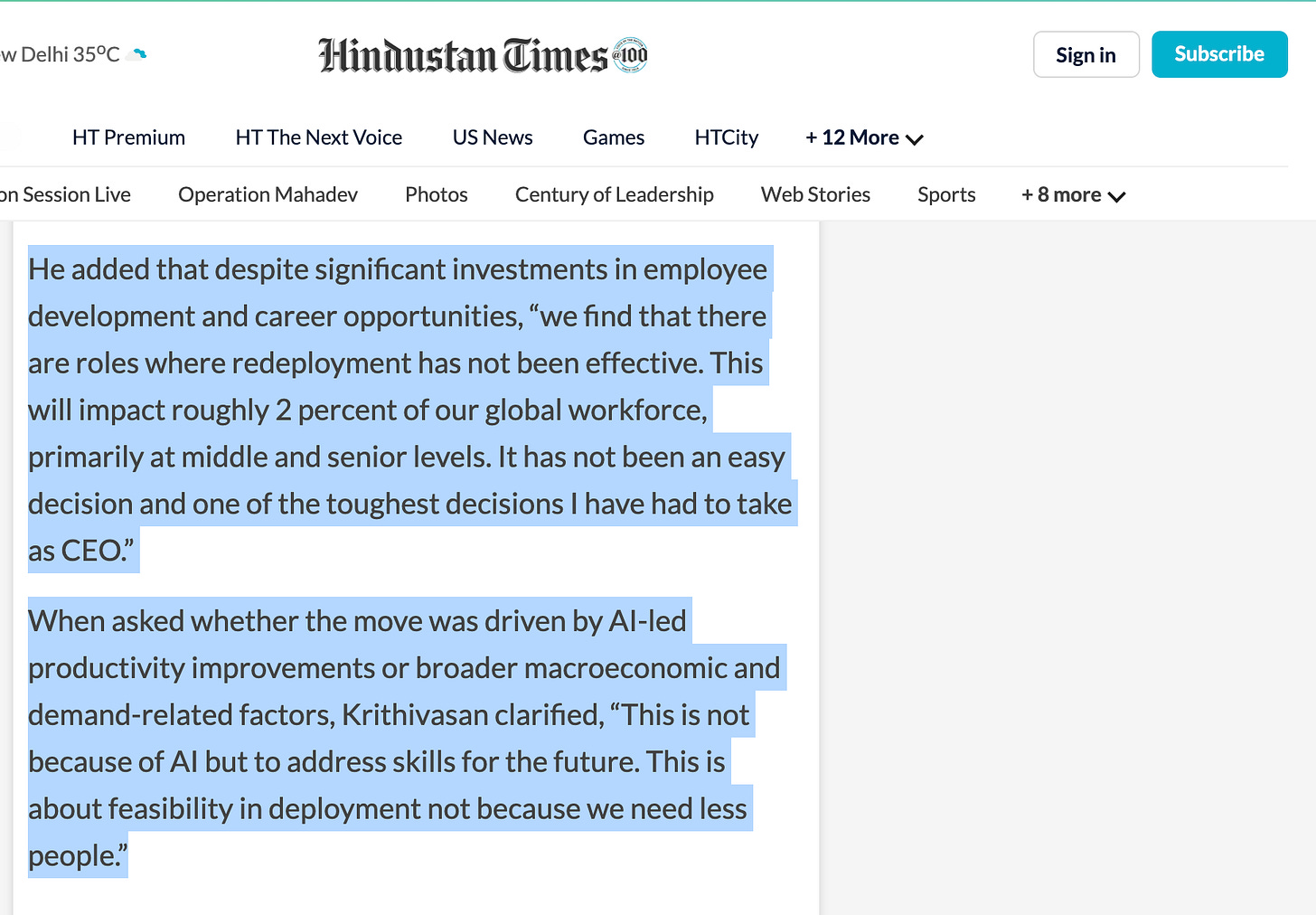

Recently, the CEO of Tata Consultancy Services also explained why the company planned to lay off 12,000 people. As you can see from the source, it’s pretty obvious what he meant.

The layoffs, roughly 2% of TCS's global workforce, largely affected people at middle and senior levels. Since the company cited the need to address “skills for the future” and “feasibility in deployment”, this indirectly suggests that many middle- and senior-level employees do not understand current digital tech realities.

Ironically, the same applies to many management and legal professionals at middle and senior levels, who are unwilling to move beyond their habit of understanding ideas the old way.

Distortion of Technology Research and Academic Trajectories as another “Failure”

The rapid progress of LLMs since the early 2020s, marked by models like GPT-3, PaLM, and LLaMA, has been driven by scaling compute, data, and model parameters. However, the development curve is becoming unclear due to persistent behavioural failures.

These include issues like hallucination (generating false information), inconsistent reasoning, sensitivity to prompt phrasing, and limited generalisation beyond training data.

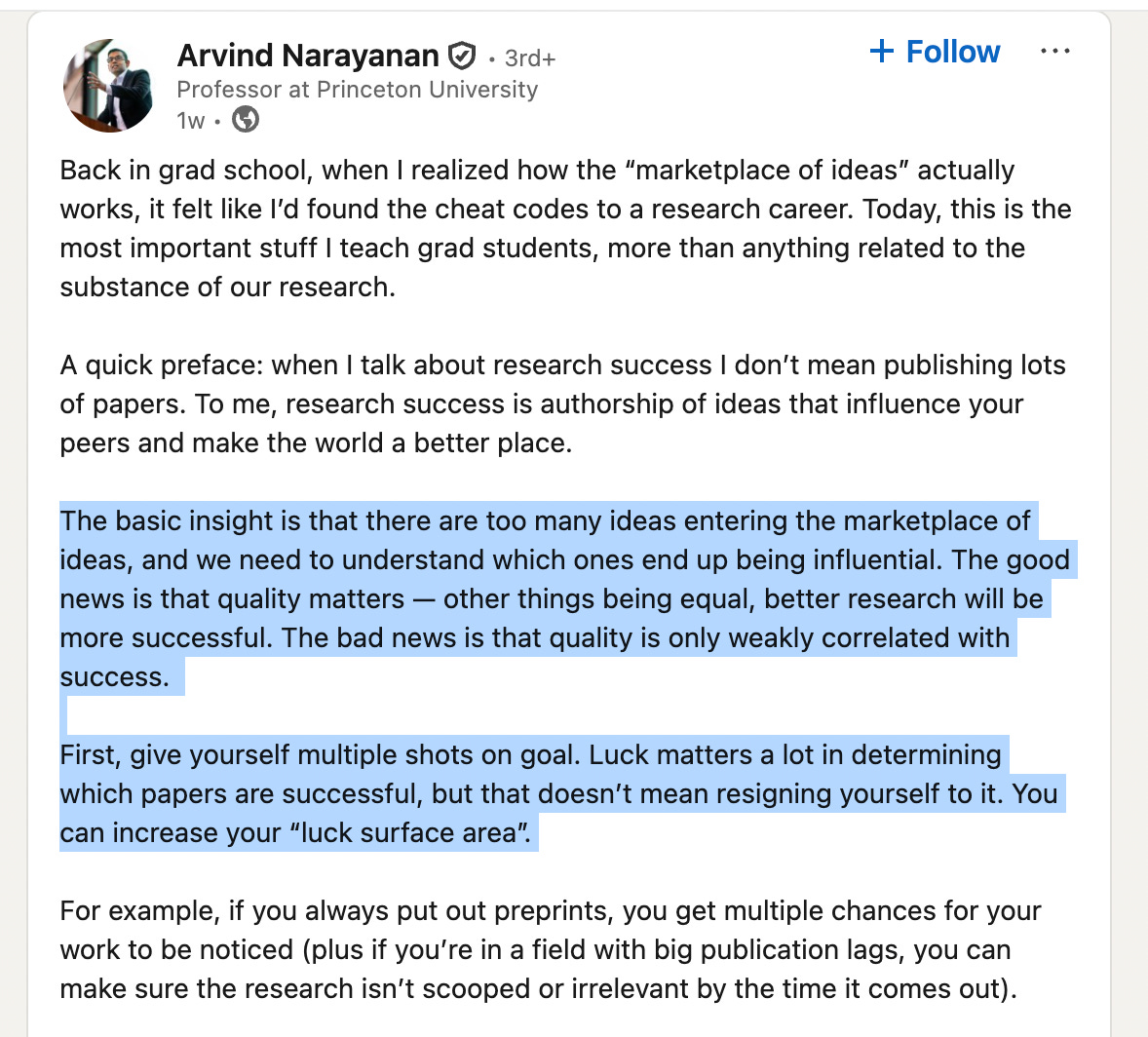

Despite massive investments in compute, recent models (e.g., those post-2023) have shown diminishing returns. For example, scaling from GPT-3 (175 billion parameters) to GPT-4-level models hasn’t consistently delivered proportional improvements in reliability or reasoning, as noted by researchers like Arvind Narayanan, Dr Gary Marcus, and Prof. Subbarao Kambhampati.

LLMs struggle with tasks requiring deep reasoning, contextual understanding, or robustness across diverse scenarios. For instance, they can excel at generating fluent text but falter on simple logical puzzles or factual accuracy without extensive fine-tuning.

These failures aren’t just technical hiccups; they suggest fundamental limitations in the current paradigm of scaling LLMs. Given LLMs’ inability to reason reliably or avoid biases, overhyping their capabilities risks misleading developers and policymakers.

In fact, it already has:

The BRICS Leaders’ Statement on the Global Governance of Artificial Intelligence [PDF] includes a passage on artificial general intelligence that is more or less confusing, especially since it sits under a “road ahead” subtitle. The passage appears to have been included because governments were persuaded that AGI is a slight possibility, which might invite policy reactions and interventions. Sadly, it reads as if it had been copy-pasted from a conversational LLM chatbot, since it uses phrases like “exacerbate inequalities” and “raising serious challenges”, which are very common in LLM outputs.

Second, the scientific basis of AGI remains unclear, because many narratives constructed around the idea remain half-baked and riddled with serious errors, to the point that even scientists are falling into the hype trap. The article excerpts shown above discuss achieving a higher form of intelligence within AI, and how that notion diverges from the biological view of intelligence.

The Role of Compute and Jevons Paradox

The Jevons Paradox, originally an economic concept, applies here: as compute becomes more efficient (e.g., through optimized hardware or algorithms), total compute consumption can rise rather than fall, because cheaper compute stimulates greater demand for AI applications. This paradox complicates AI development.

While compute efficiency has improved (e.g., via specialized chips like TPUs), the demand for larger models and broader applications (e.g., real-time translation, autonomous systems) has skyrocketed, offsetting gains.

This creates a cycle where more compute doesn’t necessarily translate to better models, as the core architectural limitations of LLMs remain unaddressed. Instead, resources are funneled into scaling rather than rethinking foundational approaches, potentially stalling innovation.
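The rebound mechanism described above can be sketched with a toy constant-elasticity demand model. Everything in it is a hypothetical assumption for illustration, not data from this article: the price of an AI query is taken to be proportional to the compute it needs, demand for queries follows `scale * price ** (-elasticity)`, and the function name `total_compute` and all numbers are made up. In this model, the paradox appears only when demand is elastic (elasticity above 1).

```python
def total_compute(compute_per_query: float, elasticity: float, scale: float = 1_000_000.0) -> float:
    """Total compute consumed under a hypothetical constant-elasticity demand curve.

    Assumption: the price of a query is proportional to the compute it uses,
    so the number of queries demanded is scale * compute_per_query ** (-elasticity).
    """
    queries = scale * compute_per_query ** (-elasticity)
    return queries * compute_per_query


if __name__ == "__main__":
    # A 2x efficiency gain: each query now needs half as much compute.
    before, after = 1.0, 0.5
    for elasticity in (0.5, 1.5):
        old = total_compute(before, elasticity)
        new = total_compute(after, elasticity)
        change = (new - old) / old * 100
        print(f"elasticity={elasticity}: total compute changes by {change:+.1f}%")
```

Running it prints a change of -29.3% for elasticity 0.5 and +41.4% for elasticity 1.5: with inelastic demand the efficiency gain saves compute, while with elastic demand the same gain increases total consumption, which is the Jevons effect.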

Now, India, with its vast pool of STEM graduates and growing AI ecosystem, faces a unique challenge.

The hype around LLMs—fueled by global tech giants and local startups—has drawn thousands of young professionals into AI development.

However, if the field remains fixated on scaling LLMs without addressing their flaws, talent may be misdirected toward incremental, low-impact work (e.g., fine-tuning existing models) rather than groundbreaking research in areas like neurosymbolic AI or alternative architectures.

This could stifle India’s potential to lead in AI innovation, as talent gets trapped in a cycle of chasing hyped-up but limited technologies.

The Hype-Driven Talent Funnel

The current environment acts as a massive funnel, channeling researchers toward work that incrementally improves or applies existing LLMs. This phenomenon is driven by several factors:

Pervasive "AI Solutionism": The belief that AI, specifically LLMs, is the optimal solution for nearly every problem has become widespread. Researchers report feeling immense pressure to incorporate LLMs into their work, regardless of suitability. This extends from grant proposals and manuscript editing to more complex research tasks, creating an ecosystem where familiarity with mainstream models is a prerequisite for success.

Industry Demand and Investment: The vast majority of notable AI models are now developed by industry giants. These companies are engaged in a high-stakes race for model supremacy, pouring resources into scaling compute power, which is now seen as "the new oil". This creates a talent market where the most lucrative and high-profile opportunities often involve fine-tuning or building upon existing architectures rather than exploring fundamentally new ones.

A Shift from Research to Implementation: The global demand for AI talent is exploding across all industries. However, much of this demand is for mid- and low-tier roles focused on integrating AI into business workflows. While essential, this emphasis on application can overshadow the need for the top-tier research talent dedicated to foundational R&D.

Consequences for Research Priorities

This concentration of talent and resources on a single architectural approach has significant consequences for the direction of AI research.

Neglect of Fundamental Flaws: Despite their impressive capabilities, current LLMs still struggle with core challenges like complex reasoning, factuality, and bias. The relentless pursuit of scale often sidelines the work needed to address these foundational weaknesses. The "scaling laws" (the empirical finding that model loss falls predictably as model size, data, and compute grow), and the broader belief that scale alone will solve these problems, are being questioned as returns diminish and new issues arise.

Sidelining Alternative Architectures: The hype around LLMs has drawn attention away from other promising research paths. Organisations are now recognising that a "one-size-fits-all" approach is inefficient and are beginning to explore a proliferation of smaller, more specialised models tailored for specific tasks. This shift suggests a growing awareness that the future of AI will likely involve a diverse portfolio of models, not just ever-larger LLMs.
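For readers unfamiliar with the term, the "scaling laws" mentioned above are usually stated as empirical power laws. A minimal illustration, using the parameter-count form and the exponent reported by Kaplan et al. (2020) for language models, where $L$ is test loss, $N$ is the number of non-embedding parameters and $N_c$ is a fitted constant, is sketched below. The exponent is a fit for one family of models and one training setup, so the numbers are indicative only.

$$
L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad \alpha_N \approx 0.076
$$

$$
\frac{L(2N)}{L(N)} = 2^{-\alpha_N} \approx 2^{-0.076} \approx 0.949
$$

Under this form, every doubling of parameters multiplies loss by the same factor, roughly a 5% reduction, so each further improvement requires multiplicatively more parameters (and compute). This is one way to read the "diminishing returns" discussed in this section.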

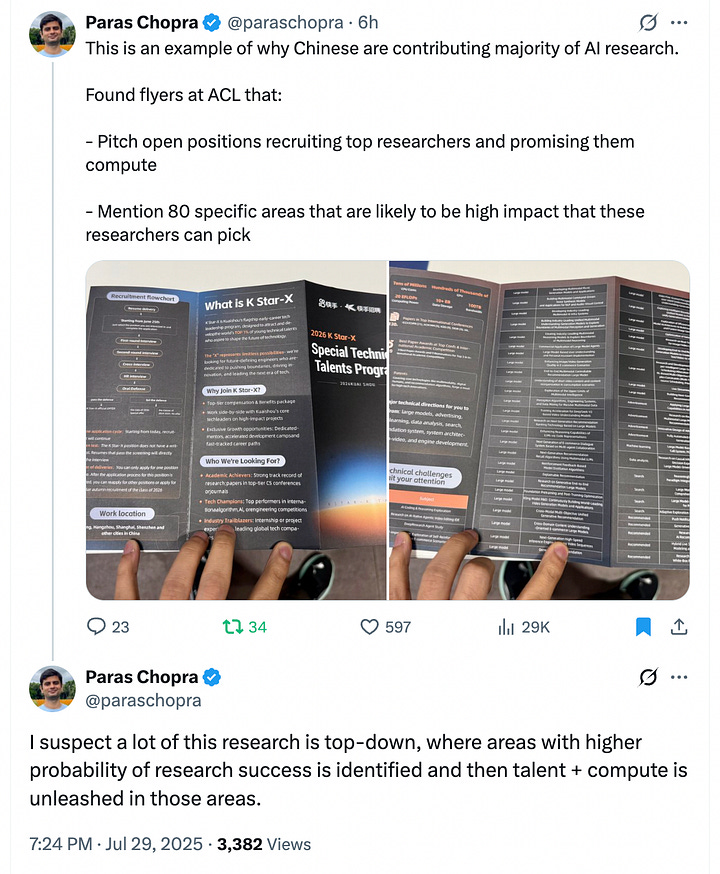

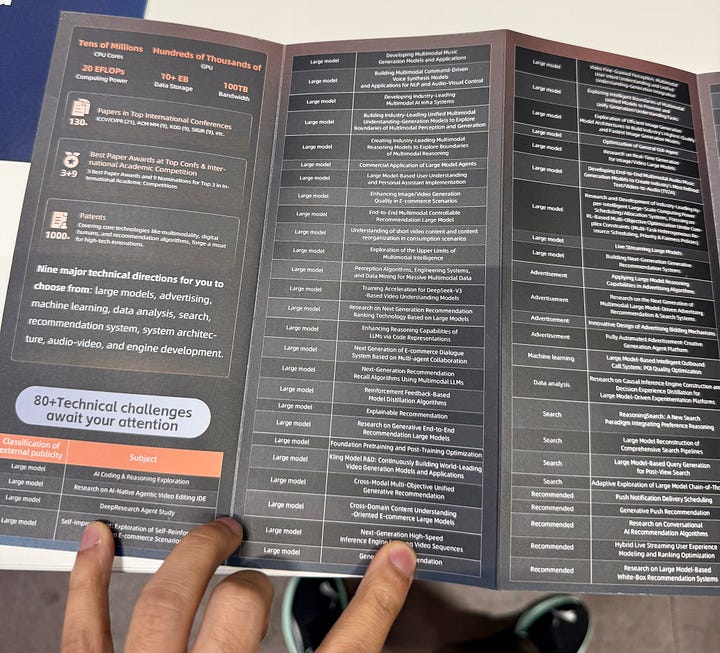

This is why I found a recent post on X pretty interesting (check the source link and screenshots above). What its author implies is that Chinese AI researchers are contributing a majority of global AI research efforts, because:

They “mention 80 specific areas that are likely to be high impact that these researchers can pick”, which is definitely not normal.

In addition, “a lot of this research is top-down, where areas with higher probability of research success is identified and then talent + compute is unleashed in those areas”.

Now, from cinema and animation to content creation, book authoring, and even car manufacturing, we see the same pattern: roles stripped of creativity and reduced to mechanical tasks.

The "human touch" (the empathy, nuance, and critical thinking that define meaningful work) is consistently undervalued, and it’s time we recognise this for what it is: a systemic choice, not always an inevitable consequence of technology.

Third, AI is shaping the way we make money-related decisions, which can affect the future of work even when those decisions have nothing to do with AI adoption workflows.

A Zerodha article (whose excerpt I have put above) perfectly captures a crucial paradox: AI is already reshaping financial and career decisions through uncertainty and hype, rather than through actual technological deployment or clear adoption workflows.

The Decision-Making Distortion Effect

The article reveals a striking phenomenon where the very inability to predict AI's impact is becoming a decision-making factor. When expert predictions range from Tyler Cowen's modest 0.5% growth estimate to Dario Amodei's 10%+ projection, this uncertainty itself becomes information that people use to make financial choices.

Consider the psychological impact: if there's even a remote chance that AI could deliver "civilisational transformation," rational actors might:

Hedge their bets by investing in AI-adjacent skills or companies

Accelerate career pivots away from potentially automatable roles

Shift investment portfolios toward AI-related assets

Change educational choices for themselves or their children

This happens regardless of whether AI adoption workflows actually mature or prove effective in their specific contexts.

The Incentive-Driven Prediction Machine

The Zerodha piece astutely identifies how misaligned incentives are creating a feedback loop that amplifies uncertainty:

Startup founders need bold visions to secure funding, so they promise transformative AI applications

Academic researchers require exciting hypotheses to get published and funded

Corporate executives must justify massive AI investments with equally massive potential returns

These incentives create a constant stream of conflicting signals that individuals interpret as they make personal financial decisions. Someone reading about both Goldman Sachs' 7% GDP growth prediction and Acemoglu's 1.1% estimate might reasonably conclude they need to prepare for multiple scenarios—even if they never directly interact with AI systems in their work.

Financial Behavior Without Technical Understanding

Most tellingly, the article notes that "our mental models for thinking about artificial intelligence either come from movies, sci-fi novels, futuristic predictions, or our own vivid imaginations." Yet people are making real financial decisions based on these mental models.

This creates a unique situation where:

Investment flows are shaped by AI hype rather than proven business models

Career planning incorporates AI disruption fears that may be premature or misdirected

Educational spending shifts toward AI-related skills that might not materialize as valuable

Risk assessment for traditional industries gets distorted by speculative AI replacement scenarios

The Future of Work Through a Financial Lens

The broader implication for the "future of work" is that financial decision-making patterns are already changing the labour market dynamics before AI adoption even reaches maturity. When thousands of professionals preemptively reskill, change career paths, or alter investment strategies based on uncertain AI predictions, these collective actions reshape:

Talent supply in different sectors

Compensation expectations in AI-adjacent roles

Industry valuations and capital allocation

Educational program demand and curriculum development

This phenomenon reinforces my earlier observation about hype-driven research priorities.

The uncertainty around AI's true capabilities—combined with the hype generated by scaling-focused research—is creating economic ripple effects that precede and potentially distort actual technological adoption. People are making consequential financial decisions based on incomplete information, driven by the very uncertainty that the AI research community's focus on scaling (rather than solving fundamental problems) has helped create.

Conclusion: What does all of this mean? Where do we go from here?

There are some interesting dynamics around software companies and their rise and fall over the last three decades, which this Substack post by Eric Flaningam addresses pretty well. Here’s what Eric implies in this excellent read:

The next $100B company will not look like the last: What I think Eric meant here is that the most valuable technology companies are anomalies that create entirely new categories. Pattern-matching for the "next Google" or the "AI version of X" is a flawed strategy because it overlooks the very uniqueness that drives groundbreaking success.

Know the game you’re playing: home runs, grand slams, or something more like Space Jam for Baseball (aiming for outer space): Flaningam makes a brilliant distinction between different types of tech investments: consumer companies are often "grand slams" with winner-take-all dynamics, while enterprise software offers more predictable "home runs". The current AI ecosystem, with its emphasis on "LLM wrappers" and enterprise applications, is largely playing for "home runs." It's a safer, more predictable game. However, this de-risked approach diverts attention and resources from the "grand slam" bets on foundational research—like neurosymbolic AI or entirely new architectures—that could solve the core flaws of current models.

Software is like chicken, 80% of it tastes the same: This is something I had realised two years ago, and I am happy the article states it plainly. The argument that today's "LLM wrappers" are no different from the "SQL wrappers" of the last decade is a crucial insight. It suggests that much of the work in the AI application layer isn't deep R&D but rather re-packaging a core technology. This is precisely the kind of low-impact, incremental work I flagged earlier, where top talent might be misallocated to building what is essentially another "chicken dish" instead of inventing a new form of sustenance.

“Market size” may be the single greatest reason for investors missing great companies: The article highlights that investors frequently miss the biggest winners because they try to size a market that doesn't exist yet. Companies like Uber, Shopify, and Palantir didn't capture an existing market; they created a new one, making initial market-size estimates irrelevant. The fixation on known markets and predictable growth models (the "home run" strategy) leads to a systemic underestimation of disruptive ideas. Financial decisions, from VC funding to individual career choices, become anchored to what is known, creating a feedback loop that starves genuinely novel ideas of resources and talent.

Companies resemble the technology waves they ride in on: The ultimate question is not "How can we make LLMs bigger?" but "What new kinds of companies and markets does AI now make possible?" The historical lens shows that the greatest value is created by those who answer this "why now?" question with a novel vision. The risk for the entire ecosystem, from researchers in India to VCs in Silicon Valley, is getting so caught up in the mechanics of the current wave (scaling models) that they fail to build the unique ships that can ride it to a new world.

That’s it. I have no actionable recommendations, because I am simply putting forward some insights of my own, supported by the many people I have cited. As someone with 40+ publications, including with Springer, ORF, the Forum of Federations and others, I hope you find this input useful. The issues I have discussed give India more than enough reason to take AI + future of work issues very seriously.

Also check out my latest LinkedIn post on the geopolitics and tech sovereignty side of things: https://www.linkedin.com/posts/abhivardhan_one-of-the-biggest-reasons-why-recommendation-activity-7355903610754387968-tRMO?utm_source=share&utm_medium=member_desktop&rcm=ACoAAB3bBjMB8v_lDnQkP5VGc3DVHLlJvJELCGU